Today's links

- A weekend's worth of links: Short hits for a long weekend.

- Object permanence: Floppy disk CD sleeves; Rules for radicals; California's preventable fires; Muppet Haunted Mansion; Wells Fargo steals rescued Nazi loot; Texas abortion release.

- Upcoming appearances: Where to find me.

- Recent appearances: Where I've been.

- Latest books: You keep readin' 'em, I'll keep writin' 'em.

- Upcoming books: Like I said, I'll keep writin' 'em.

- Colophon: All the rest.

A weekend's worth of links

Did you know that it's possible to cut a hole in any cube such that an identical cube can pass through it? Really! It's called "Rupert's Property." Further, all Platonic solids are Rupert! Except for one newly discovered shape, which cannot pass through itself. What is this eldritch polyhedron called? A Noperthedron!

https://arxiv.org/pdf/2508.18475

"Noperthedron" is the best coinage I've heard in months, which makes it a natural to open this week's linkdump, a collection of the links that piled up this week without making it into my newsletter. This is my 33rd Saturday linkdump – here are the previous 32 editions:

https://pluralistic.net/tag/linkdump/

Speaking of eldritch geometry: perhaps you've heard that Donald Trump plans to add a 90,000 sqft ballroom to the (55,000 sqft) White House. As Kate "McMansion Hell" Wagner writes for The Nation, this is a totally bullshit story floated by Trump and a notorious reactionary starchitect, and to call it a "plan" is to do unforgivable violence to the noble art of planning:

https://www.thenation.com/article/culture/white-house-ballroom-mccrery-postmodernism/

Wagner is both my favorite architecture critic and the only architecture critic I read. That's because she's every bit as talented a writer as she is a perspicacious architecture critic. What's more, she's a versatile writer. She doesn't just write these sober-but-scathing, erudite pieces for The Nation; she has, for many years, invented the genre of snarky Zillow annotations, which are convulsively funny and trenchant:

At the Electronic Frontier Foundation, we often find ourselves at the center of big political and legal fights; for example, we were the first group to sue Musk and DOGE:

https://www.eff.org/press/releases/eff-sues-opm-doge-and-musk-endangering-privacy-millions

Knowing that I'm part of this stuff helps me get through tough times – but I'm also so glad that we get to step in and defend brilliant writers like Wagner, as we did a few years ago, when Zillow tried to use legal bullying tactics to make her stop being mean to their shitty houses:

https://www.eff.org/deeplinks/2017/06/mcmansion-hell-responds-zillows-unfounded-legal-claims

If this kind of stuff excites you as much as it excites me and you're in the Bay Area, get thee to the EFF Awards (or tune into the livestream) and watch us honor this year's winners: Just Futures Law, Erie Meyer, and the Software Freedom Law Center, India:

So much of the activity that EFF defends involves writing. The web was written into existence, after all, both by the coders who hacked it together and the writers who filled it up. I've always wanted to be a writer, since I was six years old, and I'm so lucky to have grown up through an era in which the significance of the written word has continuously expanded.

I was equally lucky to have writing teachers who permanently, profoundly shaped my relationship with the written word. I've had many of those, but none were so foundational as Harriet Wolff, the longest-serving English teacher at Toronto's first alternative school, SEED School, whence I graduated after a mere seven years of instruction.

Harriet was a big part of why I spent seven years getting a four-year diploma. She was such a brilliant English teacher, and presided over such an excellent writing workshop, that I felt like I still had so much to learn from high school, even after I'd amassed enough credits to graduate, so I just stuck around.

Harriet died this summer:

https://obituaries.thestar.com/obituary/harriet-wolff-1093038534

We hadn't spoken much over the past decade, though she did come to my wedding and was every bit as charming and wonderful as I'd remembered her. Despite not having spoken to her in many years, hardly a day went by without my thinking of her and the many lessons she imparted to me.

Harriet took a very broad view of what could be good writing. Though she wasn't much of a science fiction fan, she always took my sf stories seriously – as seriously as she took the more "literary" fiction and poetry submitted by my peers. She kept a filing cabinet full of mimeographs and photocopies, all excellent examples of various forms of writing. Over the years, she handed me everything from Joan Didion essays to especially sharp op-eds from Time Magazine, along with tons of fiction.

Harriet taught me how to criticize fiction, as a means of improving my understanding of what I was doing with my writing, and as a way of exposing other writers to new ways of squeezing their own big, numinous, irreducible feelings out of their fingertips and out onto the page. She was the first person I called when I sold my first story, at 17, and I still remember standing on the lawn of my parents' house, cordless phone in one hand and acceptance letter in the other, and basking in her approval.

Harriet was a tough critiquer. Like many of the writers in her workshop, I had what you might call "glibness privilege" – a facility with words that I could use to paper over poor characterization or plotting. Whenever I'd do this, she'd fix me with her stare and say, "Cory, this is merely clever." I have used that phrase countless times – both in relation to my own work and to the work of my students.

Though Harriet was unsparing in her critiques, they never stung, because she always treated the writers in her workshop as her peers in a lifelong journey to improve our craft. She'd come out for cigarettes with us, and she came to every house party I invited her to, bringing a good, inexpensive bottle of wine and finding a sofa to sit on and discuss writing and literature. She invited me to Christmas dinner one year when I was alone for the holidays and introduced me to Yorkshire pudding, still one of my favorite dishes (though none has ever matched the pleasure of eating that first one from her oven).

Harriet apparently told her family that she didn't want a memorial, though from emails with her former students, I know that there might end up being something planned in Toronto. After all, memorials are for the living as much as for the dead. It's unlikely I'll be home for that one, but of course, the best way to memorialize Harriet is in writing.

For Harriet, writing was a big, big church, and every kind of writing was worth serious attention. I always thought of the web as a very Wolffian innovation, because it exposed so many kinds of audiences to so many kinds of writers. There's Kate Wagner's acerbic Zillow annotations, of course, but also so much more.

One of the web writers I've followed since the start is Kevin Kelly, who went from The Whole Earth Review to serving as Wired's first executive editor. Over the years, Kevin has blazed new trails for those of us who write in public, publishing many seminal pieces online. But Kevin was and is a print guy, who has blazed new trails in self-publishing, producing books that are both brilliant and beautifully wrought artifacts, like his giant, three-volume set of photos of "Vanishing Asia":

https://vanishing.asia/the-making-of-vanishing-asia/

This week, Kelly published one of his famous soup-to-nuts guides to a subject: "Everything I Know about Self-Publishing":

https://kk.org/thetechnium/everything-i-know-about-self-publishing/

It's a long, thoughtful, and extremely practical guide that is full of advice on everything from printing to promo. I've self-published several volumes, and I learned a lot.

One very important writer who's trying something new this summer – to wonderful effect – is Hilary J Allen, a business law professor at American University. During the first cryptocurrency bubble, Allen wrote some of the sharpest critiques of fintech, dubbing it "Shadow Banking 2.0":

https://pluralistic.net/2022/03/02/shadow-banking-2-point-oh/#leverage

Allen also coined the term "driverless finance," a devastatingly apt description of the crypto bro's desire for a financial system with no governance, which she expounded upon in a critical book:

https://driverlessfinancebook.com/

This summer, Allen has serialized "FinTech Dystopia," which she called "A summer beach read about Silicon Valley ruining things." Chapter 9 dropped this week, "Let’s Get Skeptical":

https://fintechdystopia.com/chapters/chapter9.html

It's a tremendous read, and while it mostly concerns itself with summarizing her arguments against the claims of fintech boosters, there's an absolutely jaw-dropping section on Neom, the doomed Saudi megaproject to build a massive "linear city" in the desert:

More than 21,000 workers (primarily from India, Bangladesh, and Nepal) are reported to have died working on NEOM and related projects in Saudi Arabia since 2017, with more than 20,000 indigenous people reported to have been forcibly displaced to make room for the development.

Allen offers these statistics as part of her critique of the "Abundance agenda," which focuses on overregulation as the main impediment to a better world. Like Allen, I'm not afraid to criticize bad regulation, but also like Allen, I'm keenly aware of the terrible harms that arise out of a totally unregulated system.

The same goes for technology, of course. There are plenty of ways to use technology that are harmful, wasteful and/or cruel, but that isn't a brief against technology itself. There are many ways that technology has been used (and can be used) to make things better. One of the pioneers of technology for good is Jim Fruchterman, founder of the venerable tech nonprofit Benetech, for which he was awarded a MacArthur "Genius" award. Fruchterman has just published his first book, with MIT Press, in which he sums up a lifetime's experience in finding ways to improve the world with technology. Appropriately enough, it's called Technology For Good:

https://mitpress.mit.edu/9780262050975/technology-for-good/

After all, technology is so marvelously flexible that there's always a countertechnology for every abusive tech. Every 10-foot digital wall implies an 11-foot digital ladder. Last month, I wrote about Echelon, a company that makes digitally connected exercise bikes, who had pushed a mandatory update to their customers' bikes that took away functionality they got for free and sold it back to them in inferior form:

https://pluralistic.net/2025/07/26/manifolds/#bark-chicken-bark

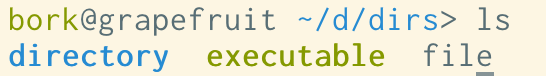

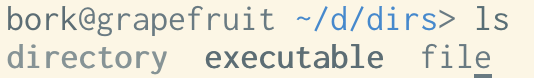

Repair hero Louis Rossmann – who is running a new, direct action right to repair group named Fulu – offered a $20,000 bounty to anyone who could crack the firmware on an Echelon bike and create a disenshittified software stack that restored the original functionality:

https://www.youtube.com/watch?v=2zayHD4kfcA

In short order, app engineer Ricky Witherspoon had cracked it, and had a way to continue to use SyncSpin, his popular app for Echelon bikes, which had been shut out by Echelon's enshittification. However, as Witherspoon told 404 Media's Jason Koebler, he won't release his code, not even for a $20,000 bounty, because doing so would make him liable to a $500,000 fine, and a five-year prison sentence, under Section 1201 of the Digital Millennium Copyright Act:

Fulu paid Witherspoon anyway (they're good eggs). Witherspoon told Koebler:

For now it’s just about spreading awareness that this is possible, and that there’s another example of egregious behavior from a company like this […] if one day releasing this was made legal, I would absolutely open source this. I can legally talk about how I did this to a certain degree, and if someone else wants to do this, they can open source it if they want to.

Free/open source software is a powerful tonic against enshittification, and it has the alchemical property of transforming the products of bad companies into good utilities that everyone benefits from.

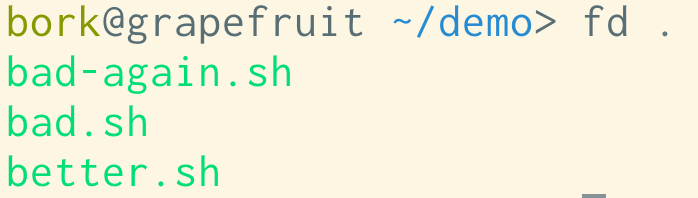

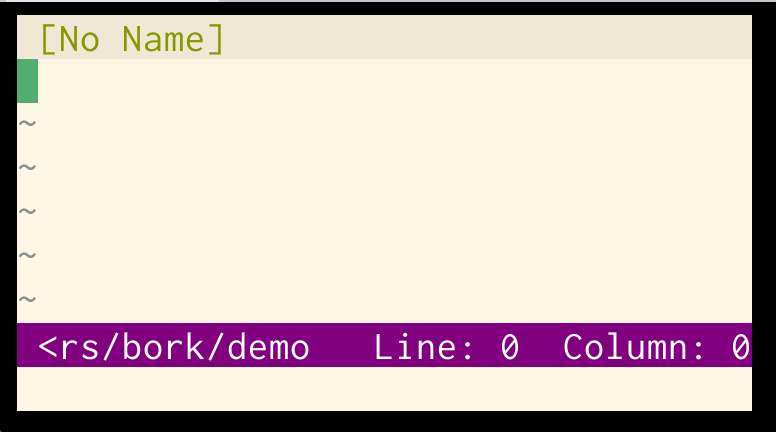

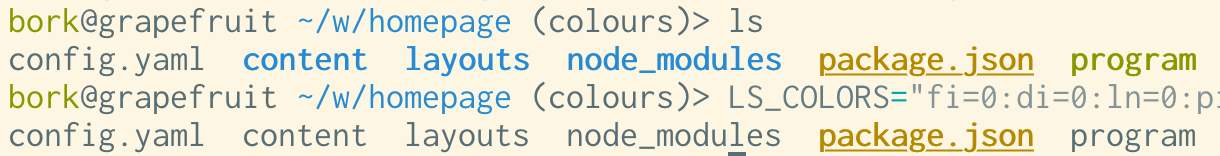

One example of this is Whisper, an open source audio transcription model released by OpenAI. Since Whisper's release, free software hackers have made steady – even remarkable – improvements to it. I discovered Whisper earlier this summer, when I couldn't locate a quote I'd heard on a recent podcast that I wanted to reference in a column. I installed Whisper on my laptop and fed it the last 30+ hours' worth of podcasts I'd listened to. An hour later, it had fully transcribed all of them, with timecode, and had put so little load on my laptop that the fan didn't even turn on. I was able to search all that text, locate the quote, and use the timecode to find the clip and check the transcription.
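For the curious, here is a minimal sketch of the "search the transcripts, then jump to the timecode" step. It is not the exact tooling described above. It assumes each transcript has already been produced as a list of segments with a start time in seconds and a text field, which is roughly the shape the open source Whisper Python package returns from a transcription. The function names, filenames and sample sentences are all made up for illustration.

```python
import re


def format_timecode(seconds: float) -> str:
    """Render a start time in seconds as H:MM:SS, for scrubbing to the clip."""
    hours, rem = divmod(int(seconds), 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours}:{minutes:02d}:{secs:02d}"


def find_quote(transcripts, phrase):
    """Return (filename, timecode, text) for each segment containing phrase.

    transcripts maps a filename to a list of segment dicts, each with
    "start" (seconds) and "text" keys. That layout is an assumption.
    """
    # Match case-insensitively and tolerate any run of whitespace between words.
    words = phrase.split()
    pattern = re.compile(r"\s+".join(re.escape(w) for w in words), re.IGNORECASE)
    hits = []
    for filename, segments in transcripts.items():
        for seg in segments:
            if pattern.search(seg["text"]):
                hits.append((filename, format_timecode(seg["start"]), seg["text"].strip()))
    return hits


# Hypothetical sample data standing in for real transcription output.
sample = {
    "episode-a.mp3": [
        {"start": 0.0, "text": " Welcome back to the show."},
        {"start": 3725.4, "text": " Every wall implies a taller ladder."},
    ],
    "episode-b.mp3": [
        {"start": 61.0, "text": " Nothing relevant here."},
    ],
}

for filename, timecode, text in find_quote(sample, "taller ladder"):
    print(f"{filename} @ {timecode}: {text}")
```

One limitation of this sketch: it checks each segment on its own, so a quote that straddles two segments will be missed unless you search for a shorter fragment of it.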

Whisper has turned extremely accurate transcription into a utility, something that can just be added to any program or operating system for free. I think this is going to be quietly revolutionary, bringing full-text search and captioning to audio and video as something we can just take for granted. That's already happening! FFmpeg is the gold-standard free software tool for converting, encoding and re-encoding video, and now the latest version integrates Whisper, allowing FFmpeg to subtitle your videos on the fly:

https://www.theregister.com/2025/08/28/ffmpeg_8_huffman/

Whisper is an example of the "residue" that will be left behind when the AI bubble pops. All bubbles pop, after all, but not all bubbles leave behind a useful residue. When crypto dies, its residue will be a few programmers who've developed secure coding habits in Rust, but besides that, all that will be left behind is terrible Austrian economics and worse monkey JPEGs:

https://pluralistic.net/2023/12/19/bubblenomics/#pop

But the free/open source code generated by stupid and/or evil projects often lives on long after those projects are forgotten. And lots (most) of free/open code is written for good purposes.

Take Madeline, a platform for tracking loans made by co-operatives, produced by the Seed Commons, which is now used by financial co-ops around the world, as they make "non-extractive investments in worker and community-owned businesses on the ground":

Madeline (and Seed Commons) are one of those bright lights that are easy to miss in these brutal and terrifying times. And if that's not enough, there's always booze. If you're thinking of drowning your sorrows, you could do worse than to pour your brown liquor out of a decanter shaped like a giant Atari CX-10 joystick:

https://atari.com/products/atari-joystick-decanter-set

That's the kind of brand necrophilia that could really enhance a night's drinking.

Object permanence

#20yrsago 5.25″ floppies make great CD sleeves https://web.archive.org/web/20050924144644/http://www.readymademag.com/feature_18_monkey.php

#20yrsago Hollywood can break down any door in Delhi https://web.archive.org/web/20050903065949/https://www.eff.org/deeplinks/archives/003943.php

#20yrsago Side-band attack tips virtual Blackjack dealer’s hand https://web.archive.org/web/20051119111417/https://haacked.com/archive/2005/08/29/9748.aspx

#20yrsago Judge to RIAA: Keep your “conference center” out of my court https://web.archive.org/web/20051001031307/http://www.godwinslaw.org/weblog/archive/2005/08/29/runaround-suits

#15yrsago Which ebook sellers will allow publishers and writers to opt out of DRM? https://www.publishersweekly.com/pw/by-topic/columns-and-blogs/cory-doctorow/article/44012-doctorow-s-first-law.html

#15yrsago 10 Rules for Radicals: Lessons from rogue archivist Carl Malamud https://public.resource.org/rules/

#15yrsago Homeowners’ associations: hives of petty authoritarianism https://web.archive.org/web/20100606170504/http://theweek.com/article/index/104150/top-7-insane-homeowners-association-rules

#15yrsago Lynd Ward’s wordless, Depression-era woodcut novels https://memex.craphound.com/2010/08/29/lynd-wards-wordless-depression-era-woodcut-novels/#5yrsago

#10yrsago Suit: Wells Fargo sent contractors to break into our house, loot family treasures rescued from Nazis https://theintercept.com/2015/08/28/wells-fargo-contractors-stole-family-heirlooms/

#10yrsago Texas doctor’s consent form for women seeking abortions https://memex.craphound.com/wp-content/uploads/2020/09/3kscWU5-2-scaled.jpg

#10yrsago Spear phishers with suspected ties to Russian government spoof fake EFF domain, attack White House https://www.eff.org/deeplinks/2015/08/new-spear-phishing-campaign-pretends-be-eff

#10yrsago Rowlf the dog gives a dramatic reading of “Grim Grinning Ghosts.” https://www.youtube.com/watch?v=CPMTEJ_IAAU

#5yrsago California's preventable fires https://pluralistic.net/2020/08/29/chickenized-home-to-roost/#cal-burning

Upcoming appearances

- Ithaca: AD White keynote (Cornell), Sep 12
https://deanoffaculty.cornell.edu/events/keynote-cory-doctorow-professor-at-large/

- DC: Enshittification at Politics and Prose, Oct 8
https://politics-prose.com/cory-doctorow-10825

- NYC: Enshittification with Lina Khan (Brooklyn Public Library), Oct 9
https://www.bklynlibrary.org/calendar/cory-doctorow-discusses-central-library-dweck-20251009-0700pm

- New Orleans: DeepSouthCon63, Oct 10-12
http://www.contraflowscifi.org/

- Chicago: Enshittification with Anand Giridharadas (Chicago Humanities), Oct 15
https://www.oldtownschool.org/concerts/2025/10-15-2025-kara-swisher-and-cory-doctorow-on-enshittification/

- San Francisco: Enshittification at Public Works (The Booksmith), Oct 20
https://app.gopassage.com/events/doctorow25

- Miami: Enshittification at Books & Books, Nov 5
https://www.eventbrite.com/e/an-evening-with-cory-doctorow-tickets-1504647263469

Recent appearances

- Cory Doctorow DESTROYS Enshittification (QAA Podcast)
https://soundcloud.com/qanonanonymous/cory-doctorow-destroys-enshitification-e338

- Divesting from Amazon’s Audible and the Fight for Digital Rights (Libro.fm)
https://pocketcasts.com/podcasts/9349e8d0-a87f-013a-d8af-0acc26574db2/00e6cbcf-7f27-4589-a11e-93e4ab59c04b

- The Utopias Podcast
https://www.buzzsprout.com/2272465/episodes/17650124

Latest books

- "Picks and Shovels": a sequel to "Red Team Blues," about the heroic era of the PC, Tor Books (US), Head of Zeus (UK), February 2025 (https://us.macmillan.com/books/9781250865908/picksandshovels).

- "The Bezzle": a sequel to "Red Team Blues," about prison-tech and other grifts, Tor Books (US), Head of Zeus (UK), February 2024 (the-bezzle.org).

- "The Lost Cause": a solarpunk novel of hope in the climate emergency, Tor Books (US), Head of Zeus (UK), November 2023 (http://lost-cause.org).

- "The Internet Con": A nonfiction book about interoperability and Big Tech (Verso) September 2023 (http://seizethemeansofcomputation.org). Signed copies at Book Soup (https://www.booksoup.com/book/9781804291245).

- "Red Team Blues": "A grabby, compulsive thriller that will leave you knowing more about how the world works than you did before." Tor Books http://redteamblues.com.

- "Chokepoint Capitalism: How to Beat Big Tech, Tame Big Content, and Get Artists Paid," with Rebecca Giblin, on how to unrig the markets for creative labor, Beacon Press/Scribe 2022 https://chokepointcapitalism.com

Upcoming books

- "Canny Valley": A limited edition collection of the collages I create for Pluralistic, self-published, September 2025

- "Enshittification: Why Everything Suddenly Got Worse and What to Do About It," Farrar, Straus, Giroux, October 7 2025
https://us.macmillan.com/books/9780374619329/enshittification/

- "Unauthorized Bread": a middle-grades graphic novel adapted from my novella about refugees, toasters and DRM, FirstSecond, 2026

- "Enshittification: Why Everything Suddenly Got Worse and What to Do About It" (the graphic novel), FirstSecond, 2026

- "The Memex Method," Farrar, Straus, Giroux, 2026

- "The Reverse-Centaur's Guide to AI," a short book about being a better AI critic, Farrar, Straus and Giroux, 2026

Colophon

Today's top sources:

Currently writing:

- "The Reverse Centaur's Guide to AI," a short book for Farrar, Straus and Giroux about being an effective AI critic. (747 words yesterday, 46239 words total). FIRST DRAFT COMPLETE

- A Little Brother short story about DIY insulin PLANNING

This work – excluding any serialized fiction – is licensed under a Creative Commons Attribution 4.0 license. That means you can use it any way you like, including commercially, provided that you attribute it to me, Cory Doctorow, and include a link to pluralistic.net.

https://creativecommons.org/licenses/by/4.0/

Quotations and images are not included in this license; they are included either under a limitation or exception to copyright, or on the basis of a separate license. Please exercise caution.

How to get Pluralistic:

Blog (no ads, tracking, or data-collection):

Newsletter (no ads, tracking, or data-collection):

https://pluralistic.net/plura-list

Mastodon (no ads, tracking, or data-collection):

Medium (no ads, paywalled):

Twitter (mass-scale, unrestricted, third-party surveillance and advertising):

Tumblr (mass-scale, unrestricted, third-party surveillance and advertising):

https://mostlysignssomeportents.tumblr.com/tagged/pluralistic

"When life gives you SARS, you make sarsaparilla" -Joey "Accordion Guy" DeVilla

READ CAREFULLY: By reading this, you agree, on behalf of your employer, to release me from all obligations and waivers arising from any and all NON-NEGOTIATED agreements, licenses, terms-of-service, shrinkwrap, clickwrap, browsewrap, confidentiality, non-disclosure, non-compete and acceptable use policies ("BOGUS AGREEMENTS") that I have entered into with your employer, its partners, licensors, agents and assigns, in perpetuity, without prejudice to my ongoing rights and privileges. You further represent that you have the authority to release me from any BOGUS AGREEMENTS on behalf of your employer.

ISSN: 3066-764X
